In our previous articles, we showed you how to integrate chat completions from OpenAI into your .NET apps. While this is super useful for single-purpose tasks (like categorizing products or summarizing data), things get really interesting when you start to ask yourself:

What if AI systems like OpenAI's models could perform actions that are meaningful to our business?

When it comes to agentic AI, this is truly where the rubber meets the road - and software engineers in particular have a leg up, because most of the hard work has already been done for them. Integrating natural language requests made by users...

It's not just about making chatbots for your customers. You can use LLMs to do things like:

- Run an automated process to categorize data meaningful to your business.

- Automatically fill in structured records from unstructured data such as invoices.

For .NET, it all starts with the OpenAI integrations we've already discussed, but the next step is using Semantic Kernel.

What IS Semantic Kernel?

From Microsoft's docs:

Semantic Kernel is a lightweight, open-source development kit that lets you easily build AI agents and integrate the latest AI models into your C#, Python, or Java codebase. It serves as an efficient middleware that enables rapid delivery of enterprise-grade solutions.

That's a lot of words for what it really is: a useful abstraction that helps us build AI systems and integrate them into our codebase, whether it's old or new.

Watch the video "AI Calling Your Code? Integrating OpenAI & .NET w/ Semantic Kernel"

Getting started

Let's pretend we run a business that books restaurant reservations online for our clients (think OpenTable or Tock), and we want to create a chatbot that lets people book reservations at our partner restaurants.

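Let's start with the NuGet package we need. Open your favorite IDE and install the Microsoft.SemanticKernel.Connectors.OpenAI NuGet package in your .NET solution (or run dotnet add package Microsoft.SemanticKernel.Connectors.OpenAI from your project's directory).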

(If you want to skip ahead and see the full solution, you can find all of the completed code here: https://github.com/AvironSoftware/dotnet-ai-examples/tree/main/IntroToSemanticKernel)

First things first: we need to build our Semantic Kernel object using our OpenAI API key:

```csharp
using IntroToSemanticKernel; // namespace of RestaurantBookingPlugin, added later; remove this line until that class exists
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;
using ChatMessageContent = Microsoft.SemanticKernel.ChatMessageContent;

var openAIApiKey = ""; // add your OpenAI API key here
var model = "gpt-4o";

// where Semantic Kernel gets built
var semanticKernelBuilder = Kernel.CreateBuilder();
semanticKernelBuilder.AddOpenAIChatCompletion(model, openAIApiKey);
Kernel semanticKernel = semanticKernelBuilder.Build();

// where we get our chat client
var chatClient = semanticKernel.GetRequiredService<IChatCompletionService>();
```

This is relatively straightforward: we create the Kernel builder object, add OpenAI chat completion to the builder, build the Kernel, and then retrieve the chat completion service from it. This lets us use the OpenAI API to chat with ChatGPT from within our application.
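Hard-coding the key (as in the openAIApiKey line above) is fine for a quick experiment, but it is easy to commit to source control by accident. Below is a minimal sketch of one common alternative. It assumes you keep the key in an environment variable. The OPENAI_API_KEY name and the GetOpenAIApiKey helper are our own choices for illustration, not something Semantic Kernel requires:

```csharp
using System;

// Hypothetical helper: read the OpenAI API key from an environment variable
// instead of hard-coding it. The default variable name is an assumption.
static string GetOpenAIApiKey(string variableName = "OPENAI_API_KEY")
{
    var key = Environment.GetEnvironmentVariable(variableName);

    // Fail fast with a clear message rather than sending an empty key to OpenAI.
    if (string.IsNullOrWhiteSpace(key))
    {
        throw new InvalidOperationException(
            $"Set the {variableName} environment variable to your OpenAI API key.");
    }

    return key;
}
```

With this in place, var openAIApiKey = GetOpenAIApiKey(); would replace the empty string.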

Next, we'll set up the loop that stores and updates our chat history:

```csharp
var chatHistory = new ChatHistory(new List<ChatMessageContent>
{
    new ChatMessageContent(AuthorRole.System, "You are a helpful restaurant reservation booking assistant.")
});

Console.WriteLine("Say something to OpenAI and book your restaurant!");

while (true)
{
    var line = Console.ReadLine();

    // Stop on "exit" or when input ends (ReadLine returns null)
    if (line is null || line == "exit")
    {
        break;
    }

    // Record the user's message, then send the whole conversation to OpenAI
    chatHistory.Add(new ChatMessageContent(AuthorRole.User, line));

    var response = await chatClient.GetChatMessageContentsAsync(
        chatHistory
    );

    // Print the assistant's reply and keep it in the history for the next turn
    var chatResponse = response.Last();
    Console.WriteLine(chatResponse);
    chatHistory.Add(chatResponse);
}
```

Pretty straightforward - all we're doing is:

- Setting up our initial chat history with a system message that tells OpenAI how it should behave.

- Starting a chat loop that sends our messages to the OpenAI API and receives chat completions back.

- Adding each user message and each response to our ChatHistory object, so OpenAI sees the whole conversation on every turn.

This looks very similar to the OpenAI chat completion code from our previous articles - which it pretty much is!
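The chat completion API itself is stateless. Every call sends the whole ChatHistory, and the loop appends each user message and each reply so the next turn has context. The sketch below shows that bookkeeping in a self-contained way. A hypothetical FakeModel function stands in for OpenAI and only reports how many messages it received. Plain (role, content) tuples stand in for Semantic Kernel's ChatMessageContent:

```csharp
using System;
using System.Collections.Generic;

// (role, content) tuples stand in for Semantic Kernel's ChatMessageContent.
var history = new List<(string Role, string Content)>
{
    ("system", "You are a helpful restaurant reservation booking assistant.")
};

// Hypothetical stand-in for the OpenAI call: it only reports how many
// messages it was sent, which makes the growing history visible.
string FakeModel(IReadOnlyList<(string Role, string Content)> messages) =>
    $"I received {messages.Count} message(s).";

string SendTurn(string userText)
{
    history.Add(("user", userText));     // 1. record the user's message
    var reply = FakeModel(history);       // 2. send the entire history
    history.Add(("assistant", reply));   // 3. record the reply for the next turn
    return reply;
}

Console.WriteLine(SendTurn("Hi!"));                           // I received 2 message(s).
Console.WriteLine(SendTurn("Which restaurants can I book?")); // I received 4 message(s).
```

If you dropped step 3, the model would have no record of its own earlier answers on the next turn.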

But we want ChatGPT to interact with our data. To that end, we'll introduce plugins.

Introducing Semantic Kernel plugins

Plugins are how we bridge our code with OpenAI: they let OpenAI's chat completion service request that our own code be called. How would that work without Semantic Kernel?

- We give ChatGPT a list of available "tools." A tool is simply a function definition: the name of a function and any parameters that the function has. (Think of a function like get_stock_ticker() that has a parameter to search for stock tickers based on a company name.)

- We make a request to the ChatGPT chat completion API. (I want stock tickers for any company with Acme in its name.)

- ChatGPT decides whether or not to call that function based on the natural language of the request. (Here, ChatGPT would respond by asking to call that function, filling in the parameter with the value it thinks is correct, likely "Acme".)

- The function call doesn't happen automatically - ChatGPT sends back a JSON blob describing the function call it wants, and it's up to our code to run the function and send the result back so ChatGPT can finish its answer. (OpenAI's function calling documentation covers this format in more detail.)
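To make those steps concrete, here is roughly the work involved in doing this by hand. The code reads the model's tool-call request and dispatches it to your own code. This is a self-contained sketch, not production code. The JSON is a hand-written sample shaped like the assistant message in an OpenAI Chat Completions response: it has a tool_calls array whose arguments field is itself a JSON-encoded string. The get_stock_ticker function, its company_name parameter and the returned ticker are all hypothetical:

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hand-written sample of an assistant message that requests a tool call.
// Note that "arguments" is a JSON string that must be parsed a second time.
const string assistantMessageJson = """
{
  "role": "assistant",
  "content": null,
  "tool_calls": [
    {
      "id": "call_1",
      "type": "function",
      "function": {
        "name": "get_stock_ticker",
        "arguments": "{\"company_name\":\"Acme\"}"
      }
    }
  ]
}
""";

// Hypothetical local implementation of the tool we advertised to the model.
string GetStockTicker(string companyName) =>
    companyName == "Acme" ? "ACME" : "UNKNOWN";

// Map tool names (as the model sees them) to C# code that accepts the parsed arguments.
var tools = new Dictionary<string, Func<JsonElement, string>>
{
    ["get_stock_ticker"] = args => GetStockTicker(args.GetProperty("company_name").GetString()!)
};

var results = new List<string>();
using var message = JsonDocument.Parse(assistantMessageJson);

foreach (var call in message.RootElement.GetProperty("tool_calls").EnumerateArray())
{
    var function = call.GetProperty("function");
    var name = function.GetProperty("name").GetString()!;

    // Second parse: the arguments arrive as a string containing JSON.
    using var arguments = JsonDocument.Parse(function.GetProperty("arguments").GetString()!);
    var result = tools[name](arguments.RootElement);
    results.Add(result);

    // A real app would now append a "tool" message containing this result
    // (tagged with the call's id) and call the model again for its final answer.
    Console.WriteLine($"{call.GetProperty("id").GetString()}: {name} -> {result}");
}
```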

Luckily, we don't have to worry about ANY of that - Semantic Kernel takes care of it all for us.

We'll define a very simple class that will be OpenAI's interface into our code. The [KernelFunction] attribute is what tells Semantic Kernel that this method should be described to OpenAI as a function it can call.

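```csharp
// In its own file, separate from the top-level program
using Microsoft.SemanticKernel;

namespace IntroToSemanticKernel;

public class RestaurantBookingPlugin
{
    // [KernelFunction] exposes this method to the model as a callable function
    [KernelFunction]
    public string[] GetRestaurantsAvailableToBook()
    {
        return
        [
            "McDonald's",
            "Five Guys",
            "Chili's",
            "Ruth's Chris"
        ];
    }
}
```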
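This sketch shows roughly what "sent over to OpenAI" means. It builds a tool definition with System.Text.Json.Nodes, in the shape OpenAI's Chat Completions API expects in its tools array. It is not Semantic Kernel's actual output. The exact function name Semantic Kernel generates (it may include the plugin name) and any description field depend on the SK version and on attributes we haven't added here:

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Nodes;

// Illustrative tool definition for GetRestaurantsAvailableToBook in the
// OpenAI Chat Completions "tools" format. The method takes no arguments,
// so its JSON Schema "properties" object is empty.
var toolDefinition = new JsonObject
{
    ["type"] = "function",
    ["function"] = new JsonObject
    {
        ["name"] = "GetRestaurantsAvailableToBook",
        ["parameters"] = new JsonObject
        {
            ["type"] = "object",
            ["properties"] = new JsonObject()
        }
    }
};

Console.WriteLine(toolDefinition.ToJsonString(new JsonSerializerOptions { WriteIndented = true }));
```

Each method marked with [KernelFunction] ends up as an entry along these lines. That is why clear method and parameter names help the model pick the right function.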

Next, we'll add the plugin to Semantic Kernel:

```csharp
var semanticKernelBuilder = Kernel.CreateBuilder();
semanticKernelBuilder.AddOpenAIChatCompletion(model, openAIApiKey);

// Register the plugin so its [KernelFunction] methods are available to the model
semanticKernelBuilder.Plugins.AddFromObject(new RestaurantBookingPlugin());

Kernel semanticKernel = semanticKernelBuilder.Build();
```

We'll then alter the chat completion call inside our loop to enable automatic function calling and to pass in the Kernel object, so that Semantic Kernel automatically calls the function whenever OpenAI asks for it:

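```csharp
var response = await chatClient.GetChatMessageContentsAsync(
    chatHistory,
    new OpenAIPromptExecutionSettings
    {
        // Let the model choose functions; Semantic Kernel invokes them automatically
        FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
    },
    semanticKernel // the kernel whose plugins can be invoked
);

var chatResponse = response.Last();
```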
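What does FunctionChoiceBehavior.Auto() do behind that single await? Conceptually, Semantic Kernel calls the model and runs whichever kernel function the model asks for. It then adds the result to the chat history and calls the model again, stopping once the model answers in plain text. Below is a self-contained sketch of that loop. It assumes a hypothetical FakeModel in place of OpenAI and (role, content) tuples in place of Semantic Kernel's types. It is a simplification, not SK's actual implementation:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for RestaurantBookingPlugin.GetRestaurantsAvailableToBook.
string[] GetRestaurantsAvailableToBook() =>
    new[] { "McDonald's", "Five Guys", "Chili's", "Ruth's Chris" };

// Functions the "model" is allowed to request, keyed by name.
var functions = new Dictionary<string, Func<string>>
{
    ["GetRestaurantsAvailableToBook"] = () => string.Join(", ", GetRestaurantsAvailableToBook())
};

// (role, content) tuples; a "tool_call" message's content is the requested function name.
var history = new List<(string Role, string Content)>
{
    ("system", "You are a helpful restaurant reservation booking assistant."),
    ("user", "What restaurants can I book?")
};

// Fake model: requests the function until a tool result is in the history, then answers.
(string Role, string Content) FakeModel(List<(string Role, string Content)> messages)
{
    if (!messages.Any(m => m.Role == "tool"))
    {
        return ("tool_call", "GetRestaurantsAvailableToBook");
    }

    var toolResult = messages.Last(m => m.Role == "tool");
    return ("assistant", $"You can book: {toolResult.Content}.");
}

// The auto-invocation loop, with a cap so a misbehaving model can't loop forever.
const int maxRoundTrips = 5;
var roundTrips = 0;
var reply = FakeModel(history);

while (reply.Role == "tool_call" && roundTrips < maxRoundTrips)
{
    history.Add(reply);                                 // remember what the model asked for
    history.Add(("tool", functions[reply.Content]()));  // run it and record the result
    reply = FakeModel(history);                         // let the model use the result
    roundTrips++;
}

history.Add(reply);
Console.WriteLine(reply.Content);
// You can book: McDonald's, Five Guys, Chili's, Ruth's Chris.
```

In the real app you don't write this loop yourself. Semantic Kernel performs the equivalent round trips with OpenAI, and the message you print is the model's answer after any function calls.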

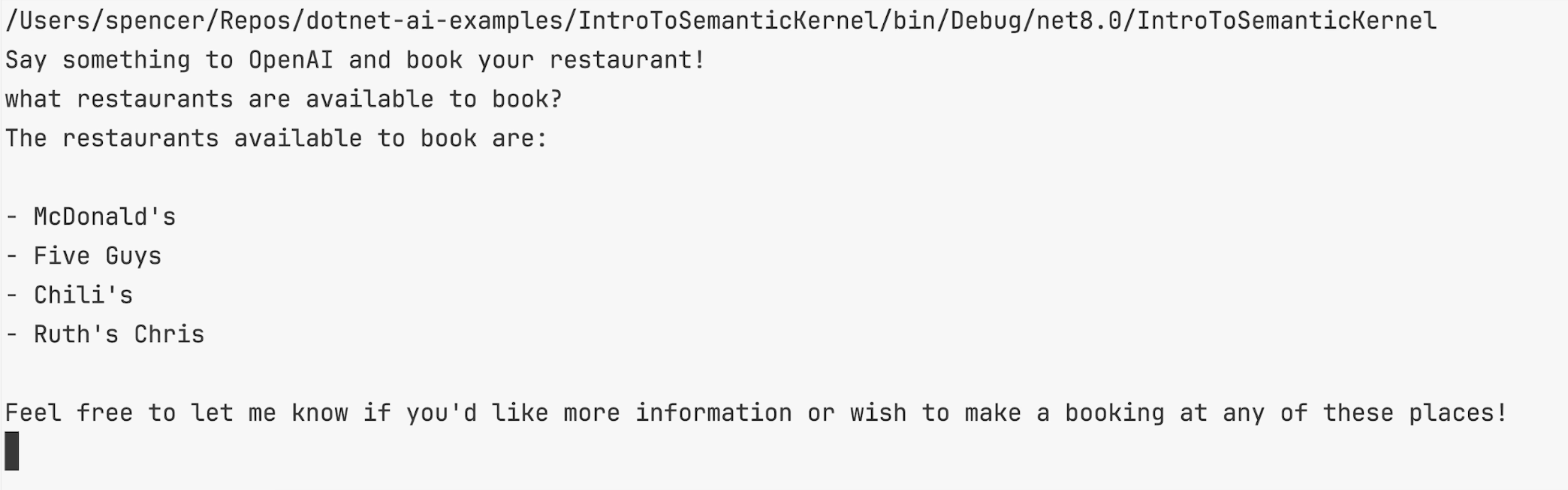

Run the solution and ask ChatGPT what restaurants are available to book. Here's what it should respond with:

That, in a nutshell, is how you connect your .NET code to OpenAI.

This gives you an immense amount of power, and it's the foundational technique for building agentic AI systems in .NET.

In the next article in the series, we'll dive into more advanced uses of Semantic Kernel's plugins to make OpenAI and other LLMs work even better.

Watch the Full Video on the Aviron Software YouTube Channel

Unlock the latest tech tips and insider insights on .NET, React, ASP.NET, React Native, Azure, and beyond with Aviron Software—your go-to source for mastering the tools shaping the future of development!

Looking to integrate AI & LLM features into your applications?

Aviron Software is the Official Software Consultancy founded by Spencer Schneidenbach. Aviron Software can align with your in-house team, or consultancy, to produce, test & publish custom software solutions.

To find out about timelines, costs & availability, send us a message via our contact form, or email hello@avironsoftware.com
